Scalable JSON processing using fs/promises, Async and Oboe

I’m working on an OSS project called AppMap for VS Code which records execution traces of test cases and running programs. It emits JSON files, which can then be used to automatically create dependency maps, execution trace diagrams, and other interactive diagrams which are invaluable for navigating large code bases. Here’s an example using Solidus, an open source eCommerce app with over 23,000 commits!

Each AppMap file can range from several kilobytes up to 10MB. AppMap has been used with projects up to 1 million lines of code, and over 5,000 test cases (each test case produces an AppMap). As you can imagine, that adds up to a lot of JSON! I’m working on a new feature that uses AppMap to compare the architecture of two different versions of an app, so I need to process a lot of JSON as quickly and efficiently as possible.

In this article I’m going to present a few of the obstacles I encountered while processing all this JSON using Node.js, and how I resolved them.

## Getting Asynchronous

Let’s start with the basics. The built-in asynchronous nature of JavaScript means that our programs can do useful work with the CPU while simultaneously performing I/O. In other words, while the computer is communicating with the network or filesystem (an operation which doesn't keep the CPU busy), the CPU can be cranking away on parsing JSON, animating cat GIFs, or whatever.

To do this in JavaScript, we don't really need to do anything special, we just need to decide *how* we want to do it. Back in the day, there was only one choice: callback functions. This approach was computationally efficient, but by default the code quickly became unreadable. JavaScript developers had a name for this: “callback hell”. These days, the programming model has been simplified with [Promises](https://developer.mozilla.org/en-US/docs/Web/JavaScript/Reference/Global_Objects/Promise), `async` and `await`. In addition, the built-in `fs` module has been enhanced with a Promises-based equivalent, `fs/promises`. So, my code uses `fs/promises` with `async` and `await`, and it reads pretty well.
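To make the contrast concrete, here is a minimal sketch of the same small task (read a file and parse it as JSON) in both styles. The function names `readJsonWithCallback` and `readJson` are made up for this illustration and are not part of AppMap.

```javascript
const fs = require('fs');
const fsp = require('fs/promises');

// Callback style: the result (or the error) is delivered to a function
// that the caller passes in. Every step needs its own error handling.
function readJsonWithCallback(path, callback) {
  fs.readFile(path, 'utf8', (err, text) => {
    if (err) return callback(err);
    let data;
    try {
      data = JSON.parse(text);
    } catch (parseErr) {
      return callback(parseErr);
    }
    callback(null, data);
  });
}

// async/await style: reads top to bottom, and both I/O errors and parse
// errors surface as a rejected Promise.
async function readJson(path) {
  return JSON.parse(await fsp.readFile(path, 'utf8'));
}
```

Both versions do the same work. The second one is simply easier to follow once several asynchronous steps are chained together.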

**`loadAppMaps`**

```javascript
const fsp = require('fs').promises;
const { join: joinPath } = require('path');

// Recursively load appmap.json files in a directory, invoking
// a callback function for each one. This function does not return
// until all the files have been read. That way, the client code
// knows when it's safe to proceed.
async function loadAppMaps(directory, fn) {
  const files = await fsp.readdir(directory);
  await Promise.all(
    files
      .filter((file) => file !== '.' && file !== '..')
      .map(async function (file) {
        const path = joinPath(directory, file);
        const stat = await fsp.stat(path);
        if (stat.isDirectory()) {
          await loadAppMaps(path, fn);
        }
        if (file.endsWith('.appmap.json')) {
          const appmap = JSON.parse(await fsp.readFile(path));
          fn(appmap);
        }
      })
  );
}
```

**Bonus material: A note about `Promise.all` and `Array.map`**

An `async` function always returns a Promise, even if nothing asynchronous actually happens inside of it. Therefore, `anArray.map(async function() {})` returns an Array of Promises. So, `await Promise.all(anArray.map(async function() {}))` will wait for all the items in anArray to be processed. Don't try this with `forEach`! Here's a [Dev.to article all about it](https://dev.to/jamesliudotcc/how-to-use-async-await-with-map-and-promise-all-1gb5).
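Here is a small sketch of the difference. The `delay` helper and the two function names are invented for this example; `delay` just stands in for real asynchronous work such as reading a file.

```javascript
// Stand-in for real async work (hypothetical helper).
const delay = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

async function withMap(items) {
  const done = [];
  // map returns an Array of Promises, and Promise.all waits for all of them.
  await Promise.all(
    items.map(async (item) => {
      await delay(10);
      done.push(item);
    })
  );
  return done.length; // every item has been processed by now
}

async function withForEach(items) {
  const done = [];
  // forEach throws away the Promises returned by the async callback,
  // so there is nothing left to await.
  items.forEach(async (item) => {
    await delay(10);
    done.push(item);
  });
  return done.length; // still 0: no callback has got past its first await
}
```

With `forEach`, the work still happens eventually, but the calling code has no way of knowing when it has finished.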

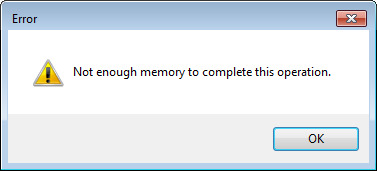

Asynchronous processing is so ubiquitous in JavaScript that it would be easy to think there’s no downside. But consider what happens in my program when there are thousands of large AppMap files. In a synchronous world, each file would be processed one by one. It would be slow, but the maximum memory required by the program would simply be proportional to the largest JSON file. Not so in JavaScript! My code permits, even encourages, JavaScript to load all of those files into memory **at the same time**. No bueno.
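For contrast, a strictly one-at-a-time loader could look like the sketch below. This is not code from my project; `loadSequentially` is a hypothetical name, and it assumes the list of file paths has already been collected.

```javascript
const fsp = require('fs/promises');

// Hypothetical helper: handle the files one after another. Because each
// readFile is awaited inside the loop, the next file is not read until
// the callback for the current one has returned.
async function loadSequentially(filePaths, fn) {
  for (const filePath of filePaths) {
    const appmap = JSON.parse(await fsp.readFile(filePath, 'utf8'));
    fn(appmap);
  }
}
```

This keeps memory use down, but it gives up the overlap between I/O and CPU work that made async attractive in the first place.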

What to do? Well, I had to do some actual work in order to manage memory utilization. Disappointing, in 2021, but necessary. (Kidding!)

## Keeping a lid on things, with `Async`

When I was writing an LDAP server in Node.js back in 2014 (true story), there was this neat little library called [Async](https://caolan.github.io/async/v3/). This was before the JavaScript Array class had helpful methods like `map`, `reduce`, and `every`, so Async featured prominently in my LDAP server. Async may not be as essential now as it used to be, but it has a very useful method [`mapLimit(collection, limit, iteratee)`](https://caolan.github.io/async/v3/docs.html#mapLimit). `mapLimit` is like `Array.map`, but it runs a maximum of `limit` async operations at a time.
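To show the idea behind a concurrency limit, here is a dependency-free sketch. It is not the Async library's implementation, and `mapWithLimit` is a name made up for this example; it assumes `iteratee` is an `async` function (or otherwise returns a Promise or a plain value).

```javascript
// Illustration only: a hand-rolled, simplified take on the mapLimit idea.
async function mapWithLimit(items, limit, iteratee) {
  const results = new Array(items.length);
  let next = 0;

  // Each worker repeatedly claims the next unprocessed index until none
  // remain, so at most `limit` iteratee calls are in flight at once.
  async function worker() {
    while (next < items.length) {
      const index = next++;
      results[index] = await iteratee(items[index]);
    }
  }

  const workerCount = Math.min(limit, items.length);
  await Promise.all(Array.from({ length: workerCount }, () => worker()));
  return results; // same order as `items`
}
```

In real code I would rather reach for the library, which also handles details this sketch leaves out.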

To introduce `mapLimit`, most of `loadAppMaps` was moved into `listAppMapFiles`. `loadAppMaps` became:

```javascript
const asyncUtils = require('async');

async function loadAppMaps(directory) {
  const appMapFiles = [];
  await listAppMapFiles(directory, (file) => {
    appMapFiles.push(file);
  });

  // Read and parse at most 5 files at a time.
  return asyncUtils.mapLimit(appMapFiles, 5, async function (filePath) {
    return JSON.parse(await fsp.readFile(filePath));
  });
}
```
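`listAppMapFiles` isn't shown above. As a rough sketch, assuming it only walks the directory tree and reports the path of each `.appmap.json` file without reading it, it could look something like this:

```javascript
const fsp = require('fs/promises');
const { join } = require('path');

// Assumed shape, for illustration: recursively walk `directory` and call
// `fn` with the path of every *.appmap.json file found.
async function listAppMapFiles(directory, fn) {
  // withFileTypes returns Dirent objects, which avoids a separate stat call.
  const entries = await fsp.readdir(directory, { withFileTypes: true });
  for (const entry of entries) {
    const path = join(directory, entry.name);
    if (entry.isDirectory()) {
      await listAppMapFiles(path, fn);
    } else if (entry.name.endsWith('.appmap.json')) {
      fn(path);
    }
  }
}
```

Collecting paths is cheap, since a path is a short string. The expensive part, reading and parsing, is what goes through `mapLimit`.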

Loading 5 files concurrently seems like enough to get the benefits of async processing, without having to worry about running out of memory. Especially after the next optimization...

## Parsing just what's needed, with Oboe.js

I mentioned that I'm computing the "diff" between two large directories of AppMap data. As it happens, I don't always need to read everything that's in an AppMap JSON file; sometimes, I only need the "metadata".

Each AppMap [looks like this](https://github.com/getappmap/appmap#file-structure):

```yaml
{
  "version": "1.0",
  "metadata": { ... a few kb ... },
  "class_map": { ... a MB or so... },
  "events": [ potentially a huge number of things ]
}
```

Almost all of the data is stored under the `events` key, but we only need the `metadata`. Enter [Oboe.js](http://oboejs.com/), a streaming JSON parser.

Streaming means, in this case, "a bit at a time".

The [Oboe.js API](http://oboejs.com/api) has two features that were useful to me:

1. You can register to be notified on just the JSON object keys that you want.

2. You can terminate the parsing early once you have what you need.

The first feature makes the programming model pretty simple, and the second feature saves program execution time. The streaming nature of it ensures that it will use much less memory than `JSON.parse`, because Oboe.js will not actually load the entire JSON object into memory (unless you force it to).
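The "read a bit at a time and stop early" idea can be seen without Oboe at all, using a plain Node.js read stream. The sketch below is not how Oboe works internally; `readUntil` is a hypothetical helper that does a naive text search rather than real JSON parsing, and the 1 KB chunk size is an arbitrary choice for the example.

```javascript
const { createReadStream } = require('fs');

// Read `fileName` in small chunks and stop as soon as `marker` has been
// seen. Resolves with the number of characters read before stopping.
function readUntil(fileName, marker) {
  return new Promise((resolve, reject) => {
    let seen = '';
    const stream = createReadStream(fileName, {
      encoding: 'utf8',
      highWaterMark: 1024, // chunk size in bytes
    });
    stream.on('data', (chunk) => {
      seen += chunk;
      if (seen.includes(marker)) {
        stream.destroy(); // stop reading; the rest of the file is never loaded
        resolve(seen.length);
      }
    });
    // Marker never found: the whole file was read.
    stream.on('end', () => resolve(seen.length));
    stream.on('error', reject);
  });
}
```

If the marker sits near the top of a multi-megabyte file, only the first chunk or two is ever read from disk.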

My use of Oboe looks something like this:

```javascript
const { createReadStream } = require('fs');
const oboe = require('oboe');

function streamingLoad(fileName, metadata) {
  return new Promise(function (resolve, reject) {
    oboe(createReadStream(fileName))
      .on('node', 'metadata', function (node) {
        metadata[fileName] = node;
        // We're done!
        this.abort();
        resolve();
      })
      .fail(reject);
  });
}
```

## Wrap-up

So, that's the story. To recap:

- `fs/promises` gives you a nice modern interface to Node.js `fs`.

- `Async.mapLimit` prevents too much data from being loaded into memory at the same time.

- `Oboe` is a streaming JSON parser, so we never have the whole document loaded into memory.

I haven't optimized this for speed yet. My main concern was making sure that I didn't run out of memory. When I profile this, if I find any useful performance speedups, I will write those up too. You can follow me on this site to be notified of future articles!

## While you're here...

**State of Architecture Quality Survey**

My startup [AppMap](https://appmap.io/) is conducting a survey about software architecture quality. To participate in the survey, visit the [State of Software Architecture Quality Survey](https://www.surveymonkey.com/r/archsurveygeneral). Thanks!

***


*Originally posted on [Dev.to](https://dev.to/kgilpin/scalable-json-processing-using-fs-promises-async-and-oboe-4den)*

{: style="color:gray; text-align: center;" :}