If you’re using the Forem mobile app, you may have gotten a sequence of unexpected mobile notifications.

Now, I love dev.to, but I didn’t understand why I got these notifications because I wasn’t involved in any of these threads! Well, an email from the Forem team (the open source code behind dev.to) a couple of days later cleared it up - a coding mistake had accidentally notified a small portion of users about comments they hadn’t subscribed to. The disclosure was prompt and even included a link to the commit that fixed the problem. This is awesome, because usually when things like this happen, companies go into “cover your ass” mode and their disclosures don’t include any technical details. That makes it hard to study how these types of problems can be prevented. So, first of all, kudos to the Forem dev team!

I have a deep interest in improving developer tools and processes to prevent more errors like this from happening. Some types of errors, like buffer overflows and SQL injection, are somewhat amenable to automatic detection by static analysis. But coding logic errors like this one are definitely not.

So, if these problems can’t be found by static analyzers, how can they be found? Well, there are really only two ways - code review and testing. Getting extra eyes on the code sure is a lot better than not doing it - especially for tricky bugs like this one. And testing is important - especially automated tests - but problems like this one are difficult to catch because, for starters, you have to test the code with multiple different user profiles created and active at the same time. Forem has extensive test coverage - over 5,000 tests at my last count. But the fact that this bug happened is evidence that we, as a profession, need more effective ways to catch and fix problems like this one before they slip into production.

At this point, I’ll get a little bit more opinionated about what can be done. I’ve said that static analysis can’t help us here. I’ve also said that aside from automated tests, code review is our best tool for ensuring code quality. Code review isn’t perfect - I’ll be the first to agree. It’s hard to do an in-depth code review, because code is complex, and there’s a lot of pressure to approve the PR and keep the features flowing.

But I don’t think that hasty code review or pressure was the primary contributing factor in this case - or in many other cases. The Forem PR was reviewed and approved by senior developers, and co-authored by the head of engineering. Nope - I think the biggest flaw with code review in cases like this is that the traditional line-by-line diff of the code doesn’t make problems obvious enough.

I’ve written previously about code observability, and I think this particular situation is a perfect illustration of what code observability is all about - making the behavior of the code obvious, so that we don’t have to do mental gymnastics while reviewing code.

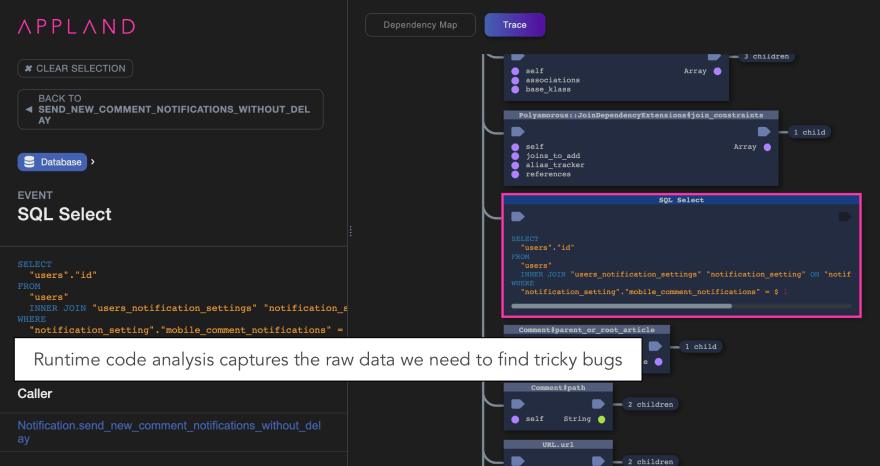

To make code behavior obvious, we need tools that observe and report on the code as it truly behaves, as it runs: runtime code analysis. If you combine runtime code analysis, code observability, and code review, what do you get? Presentations, visualizations, or views of code that make the behavior of code more obvious.

So here’s the nut: The reason why coding mistakes that lead to problems like the Forem notification bug are so hard to spot is that the behavior of the code is obfuscated, rather than revealed, by the way we choose to view the code. Want to catch more flaws in code review? Capture information about code behavior using runtime code analysis, and then process this data into views of code behavior, design, and architecture that make problems easier to spot.

To review:

- Code review is our last line of defense for catching tricky bugs before they ship.

- By their nature, tricky bugs are hard to spot from a basic line by line code diff.

- A heavy burden is placed on the reviewer to both imagine how the code works AND identify flaws in its construction.

- Specialized views of code changes can make flaws much more obvious.

- Runtime code analysis can collect information about code that makes these views feasible.

What kind of views, you ask? Well, the ideal view depends on the need. Here, there is a ton of thought, research and experimentation that needs to be done. But let’s take a simple example.

This particular Forem bug was caused by a query which did not have a filter condition. The query should have read: Find all enabled mobile notifications for a particular user. But instead it read: Find all enabled mobile notifications. The clause to limit the query results to a particular user was missing. That’s why I (and perhaps you) received errant notifications. The query found other people’s notifications and sent them to you.

So what information could we present to a code reviewer that would have caught their attention? This bug was introduced in a big PR - 132 commits touching 103 files. So to catch this flaw, we would need a view that really makes this mistake pop out.

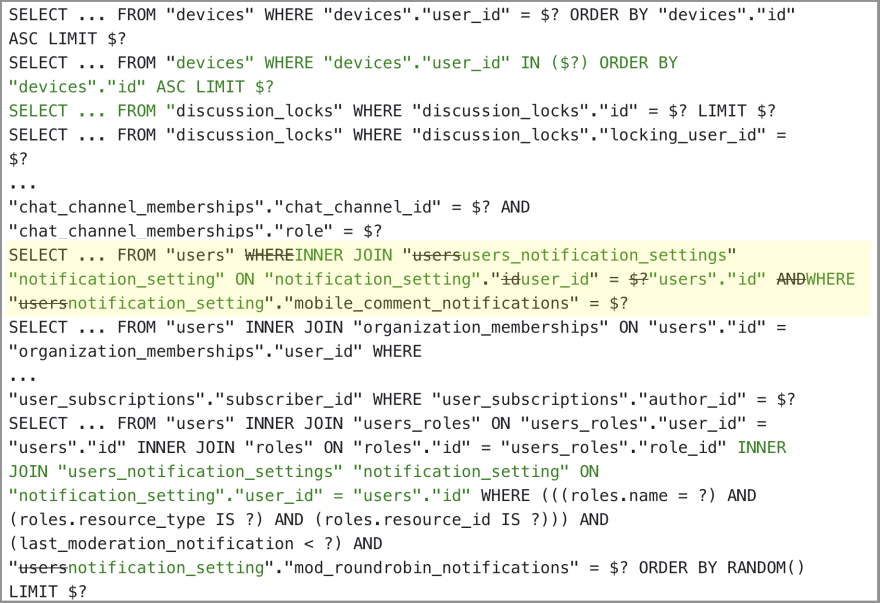

The first thing I tried was just to list all the queries across the different code versions and compare them. But there were a lot of query changes, and the signal was lost in the noise. So I made a few optimizations:

- Remove all the columns from

SELECTclauses (SELECTis not relevant to filtering). - Compress any sequence of IN clause placeholders like

( $?, $?, $? )to a single placeholder( $? )(there’s no loss of query intent). - Switch from “line” diff (Git style) to “word” diff.

Now the resulting diff is actually pretty small, considering the magnitude of the code changes and the size of the PR. Do you think you could spot this problematic query change in this type of a SQL diff view?

To take it further, and catch other types of issues, how about tracking and reviewing:

- Queries that have no LIMIT clause.

- Queries that join more than four tables.

- Queries that have more than four expressions in the WHERE clause.

- Queries that have no filter condition on a user, group, role or organization.

You get where I’m going. These are likely trouble spots… and this is just SQL. Runtime code analysis can also correlate SQL with context like web services routes, key functions, calls to other web services, cloud services, etc. There are many possibilities to explore.

So hearing all this, maybe you’d like to implement this kind of analysis for your own repos! Stay tuned for a follow-up post in which I will go step by step through the tools, data, and code that I used to generate this post. I’ll show you how I used AppMap, an open source tool I created, which stores runtime code analysis data as JSON.

PS For an even more in-depth description of the issue from the Dev.to team, check out yesterday’s post Incident Retro: Failing Comment Creation + Erroneous Push Notifications.