To access the latest features, keep your code editor plug-in up to date.

AppMap Navie AI Quickstart

Choose your AI Provider (Optional)

By default, Navie uses the GitHub Copilot LLM.

If you aren’t using Copilot, or if you would like to use your own LLM API key or local LLM, you can use a variety of other AI model providers such as OpenAI, Anthropic, Gemini, Fireworks.ai, LM Studio, and more. View the instructions for Choosing an LLM Provider for more details.

Open AppMap Navie AI

After you complete the installation of AppMap for your code editor, open the Navie Chat window to ask Navie about your application.

To open Navie Chat, open the AppMap plugin in the sidebar menu of your code editor and select the New Navie Chat option.

Ask Navie about your App

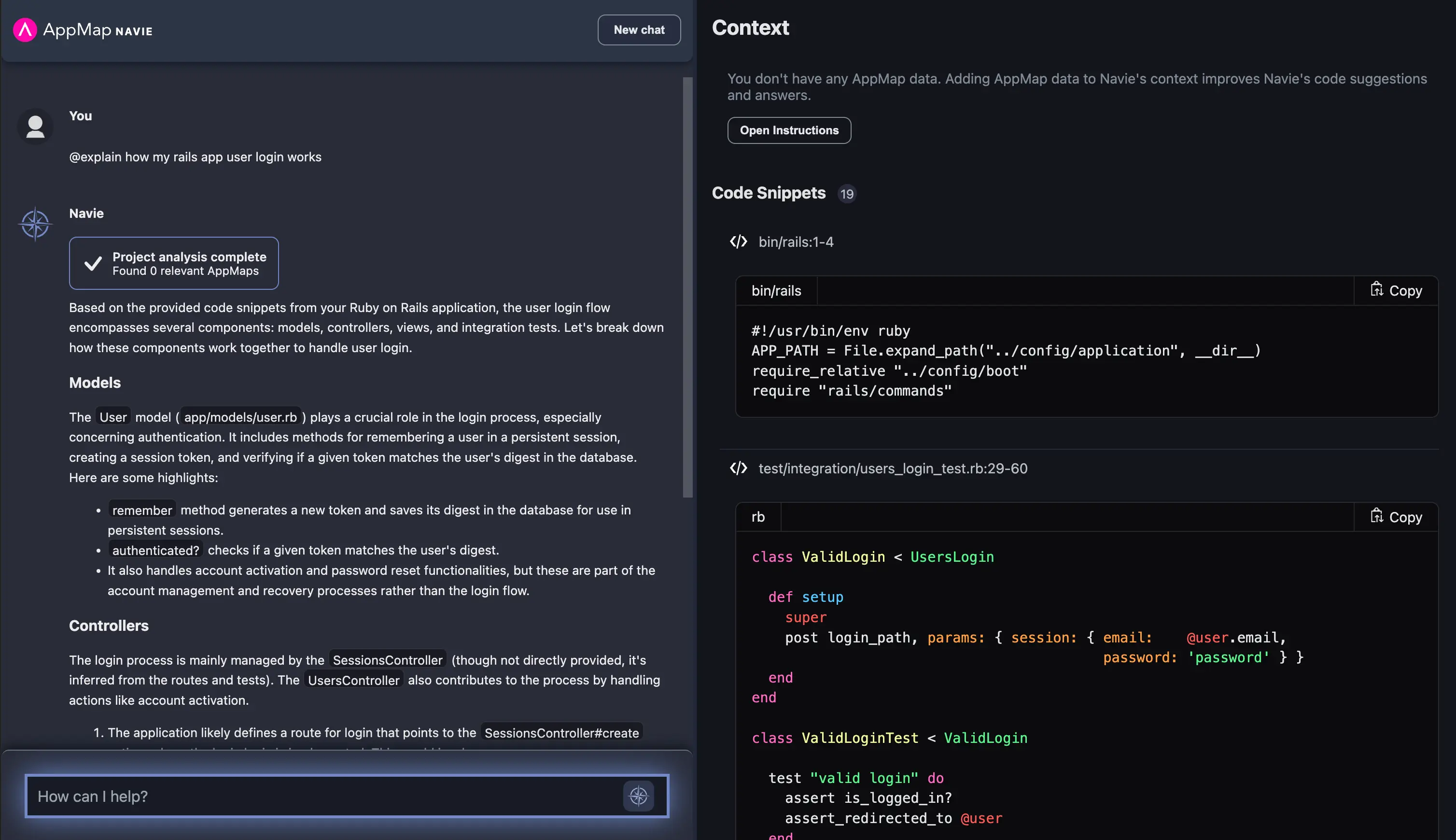

You can ask Navie questions about your application immediately after installing the plugin. Navie answers questions based on analysis of your project code. For greater accuracy on more complex projects, you can record AppMap data, and Navie will use this information as well.

When you ask Navie a question, it searches through all the available AppMap data for your project to pull in relevant traces, sequence diagrams, and code snippets for analysis. It then sends the selected context to your preferred LLM provider.
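As a rough illustration of what "pulling in relevant context" can mean, the sketch below ranks candidate snippets by how often they contain terms from the question and keeps the top few. This is a toy keyword-overlap scorer chosen for simplicity, not Navie's actual search, which this page does not describe; the functions `tokenize` and `select_context` and the sample snippet names are hypothetical.

```python
import re
from collections import Counter
from typing import Dict, List


def tokenize(text: str) -> List[str]:
    # Lowercase and split on anything that is not a letter, digit, or underscore.
    return re.findall(r"[a-z0-9_]+", text.lower())


def select_context(question: str, snippets: Dict[str, str], limit: int = 2) -> List[str]:
    """Hypothetical toy retriever: return up to `limit` snippet names, best match first.

    A snippet's score is the total number of occurrences of question terms in it.
    Snippets with a score of zero are never selected.
    """
    question_terms = set(tokenize(question))
    scores = {}
    for name, body in snippets.items():
        counts = Counter(tokenize(body))
        scores[name] = sum(counts[term] for term in question_terms)
    # Sort by descending score, breaking ties alphabetically for stable output.
    ranked = sorted((name for name in scores if scores[name] > 0),
                    key=lambda name: (-scores[name], name))
    return ranked[:limit]
```

For example, given a hypothetical controller snippet containing `user = User.new(params)` and a SQL snippet about orders, the question "How is a new user saved?" selects only the controller snippet. A real assistant would also weigh traces and sequence diagrams, which plain keyword matching cannot capture.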

To achieve the highest-quality results, we suggest using the available command modes when prompting Navie. Type `@` into the chat input to access the list of available command modes. By default, Navie chat is in a mode called `@explain`. Other specialized modes are available for generating diagrams, planning work, generating code and tests, and more. Consult the Navie commands documentation for more details.

The Navie UI includes a standard chat window and a context panel, which shows all the context included in the query to the AI provider. This context can include things such as:

Always available:

- Code Snippets

- Pinned Content

Note: Only code files and other files that are tracked by Git will be included as context and sent to the LLM when you ask a question. AppMap Navie respects your `.gitignore` and will not access files that are untracked or ignored by Git (such as files containing passwords or other sensitive data).

If AppMap Data exists:

- Sequence Diagrams

- HTTP Requests

- SQL Queries

- Other I/O Data

Navie will look for the files listed above in the following locations:

- The currently open project

- All workspace folders in Visual Studio Code

- All modules available in JetBrains IDEs
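Because only Git-tracked files are eligible as context, it can help to check which files Git tracks in your project. The sketch below approximates that set by calling `git ls-files -z`, which lists tracked files separated by NUL characters. It assumes `git` is on your `PATH` and is an illustration only, not how Navie itself selects files; the helper names `parse_ls_files` and `tracked_files` are hypothetical.

```python
import subprocess
from typing import List


def parse_ls_files(output: str) -> List[str]:
    """Split NUL-delimited `git ls-files -z` output into a list of paths."""
    return [path for path in output.split("\0") if path]


def tracked_files(repo_dir: str = ".") -> List[str]:
    """Return the files Git tracks under repo_dir (untracked files are omitted).

    Raises subprocess.CalledProcessError if repo_dir is not inside a Git repository.
    """
    result = subprocess.run(
        ["git", "ls-files", "-z"],  # -z avoids quoting of unusual file names
        cwd=repo_dir,
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_ls_files(result.stdout)
```

For example, calling `tracked_files()` from your project root returns the relative paths Git tracks; a file that does not appear in that list is, under the rule in the note, not a candidate for context.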

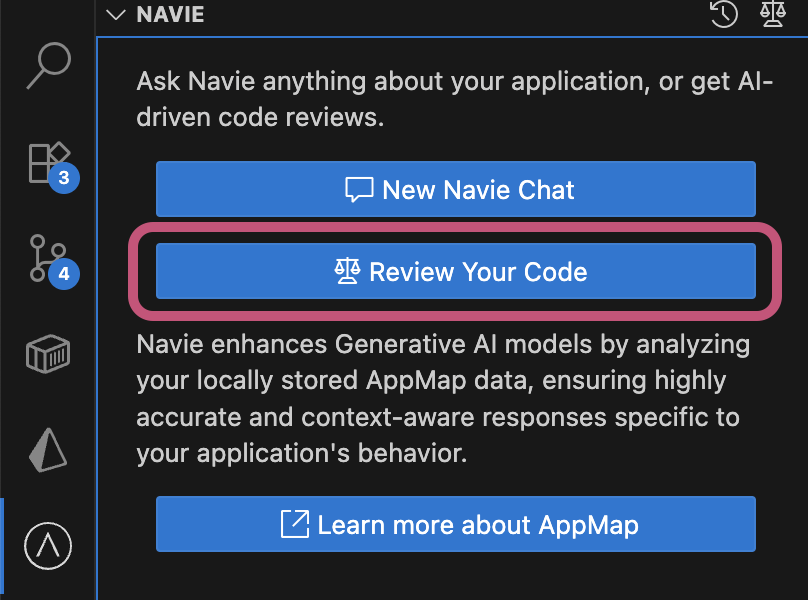

Review Your Code

You can also use Navie to review your code. This is a great way to get an overview of your codebase, identify potential issues, and improve the quality of your code. Navie uses the same types of data to perform code review as it does for answering questions, including code snippets, sequence diagrams, traces, and other I/O data.

To start a code review, click the Review Your Code button in the Navie sidebar. This will generate a list of suggested improvements for your codebase, including:

- Potential bugs

- SQL query optimizations

- HTTP request optimizations

- Security vulnerabilities

- Performance improvements

- Code design improvements and anti-patterns

… and more. Because Navie uses AppMap Data, it can provide more accurate and relevant suggestions than other code review tools.

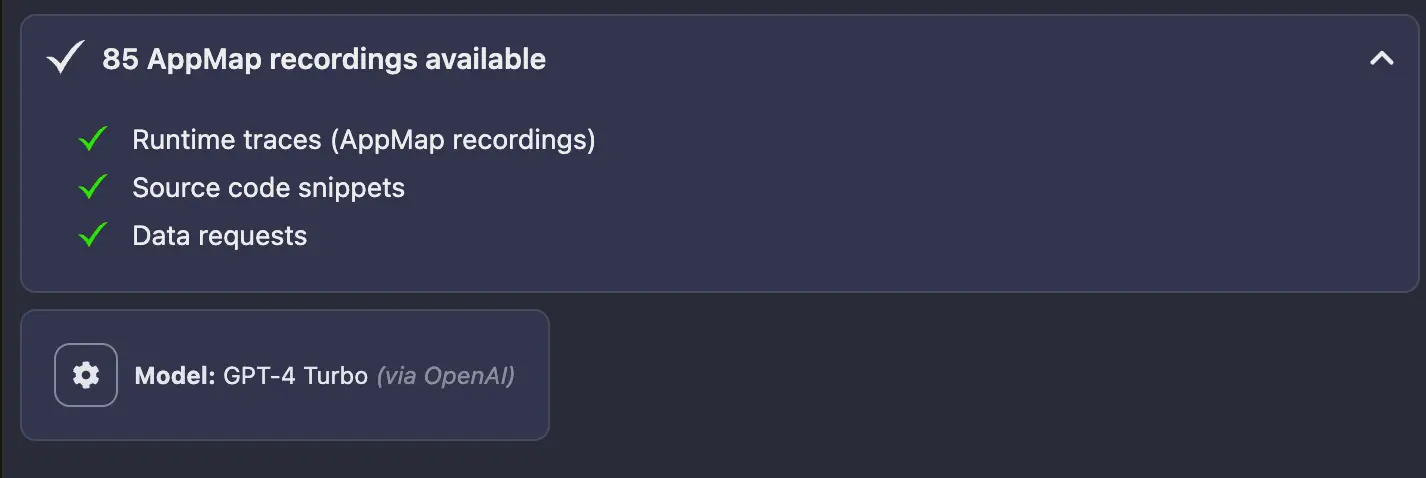

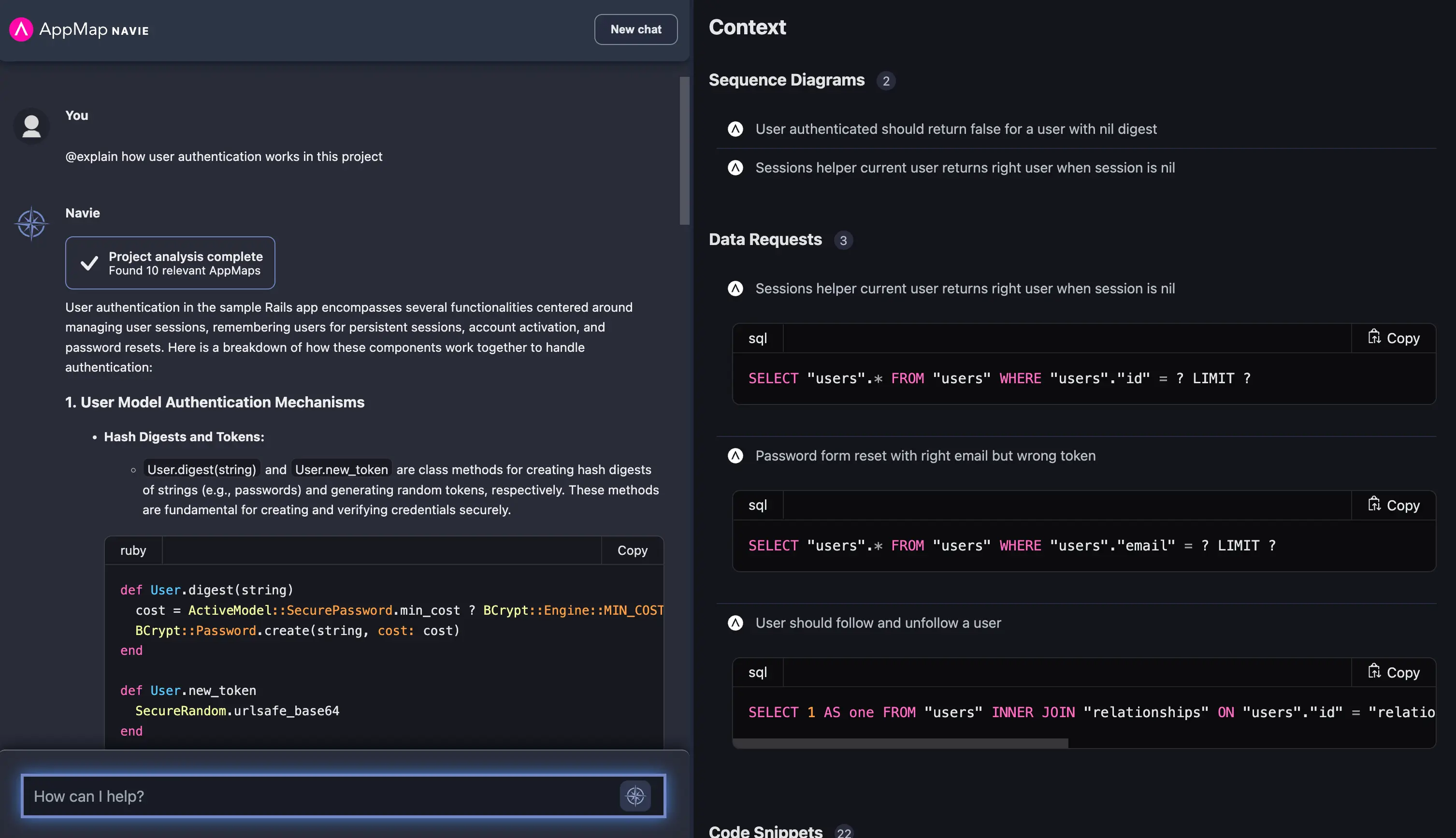

Improve Navie AI Responses with AppMap Data

Generating AppMap Data will greatly improve the quality of your Navie AI responses. With AppMap Data for your project, you can ask much deeper architectural questions about your application. This is possible because of the additional context that AppMap Data provides and the higher accuracy and relevance of the code snippets selected for your question.

View the Navie AI examples page to see some examples of Navie fixing complex architectural issues, performance issues, and adding new features to your application.

After your AppMap Data is generated, the Navie window will indicate the AppMap Data that exists for your project.

With this AppMap Data in your project, questions you ask Navie will draw on data flows, sequence diagrams, and traces in addition to the relevant code snippets from the project.

Next Steps

Continue to ask Navie questions, create new code for your application, and generate additional AppMap Data as your code changes. For each subsequent question, Navie re-queries your AppMap Data, traces, data flows, and source code to build the context for its answer.

Learn more about making AppMap Data to improve Navie response accuracy
