Bring Your Own Model Examples

- GitHub Copilot Language Model

- Google Gemini

- OpenAI

- Anthropic (Claude)

- Azure OpenAI

- AnyScale Endpoints

- Fireworks AI

- Ollama

- LM Studio

GitHub Copilot Language Model

With VS Code `1.91` or greater and an active GitHub Copilot subscription, you can use Navie with the Copilot Language Model as a supported backend model. This lets you use the runtime-powered Navie AI Architect with your existing Copilot subscription. This is the recommended option for users in corporate environments where Copilot is the only approved and supported language model.

Requirements

The following items are required to use the GitHub Copilot Language Model with Navie:

- VS Code version `1.91` or greater

- AppMap extension version `v0.123.0` or greater

- GitHub Copilot VS Code extension must be installed

- Signed into an active paid or trial GitHub Copilot subscription
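The two version requirements above can be checked with a small helper. This is a minimal sketch, not part of AppMap: the function names are hypothetical, and it assumes you have already obtained the installed version strings yourself (for example from the VS Code "About" dialog and the Extensions view).

```python
from __future__ import annotations

import re

# Minimum versions quoted in the requirements list above.
MIN_VSCODE = (1, 91)
MIN_APPMAP_EXTENSION = (0, 123, 0)


def parse_version(text: str) -> tuple[int, ...]:
    """Turn '1.91.1' or 'v0.123.0' into a tuple of ints, ignoring any suffix."""
    match = re.match(r"v?(\d+(?:\.\d+)*)", text.strip())
    if match is None:
        raise ValueError(f"not a version string: {text!r}")
    return tuple(int(part) for part in match.group(1).split("."))


def _pad(version: tuple[int, ...], width: int) -> tuple[int, ...]:
    """Right-pad with zeros so (1, 91) compares equal to (1, 91, 0)."""
    return version + (0,) * (width - len(version))


def meets_minimum(installed: str, minimum: tuple[int, ...]) -> bool:
    """Compare numerically, so that '1.100.0' is correctly newer than '1.91'."""
    version = parse_version(installed)
    width = max(len(version), len(minimum))
    return _pad(version, width) >= _pad(minimum, width)
```

Comparing tuples of integers rather than raw strings avoids the common mistake of treating `1.100` as older than `1.91`.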

Setup

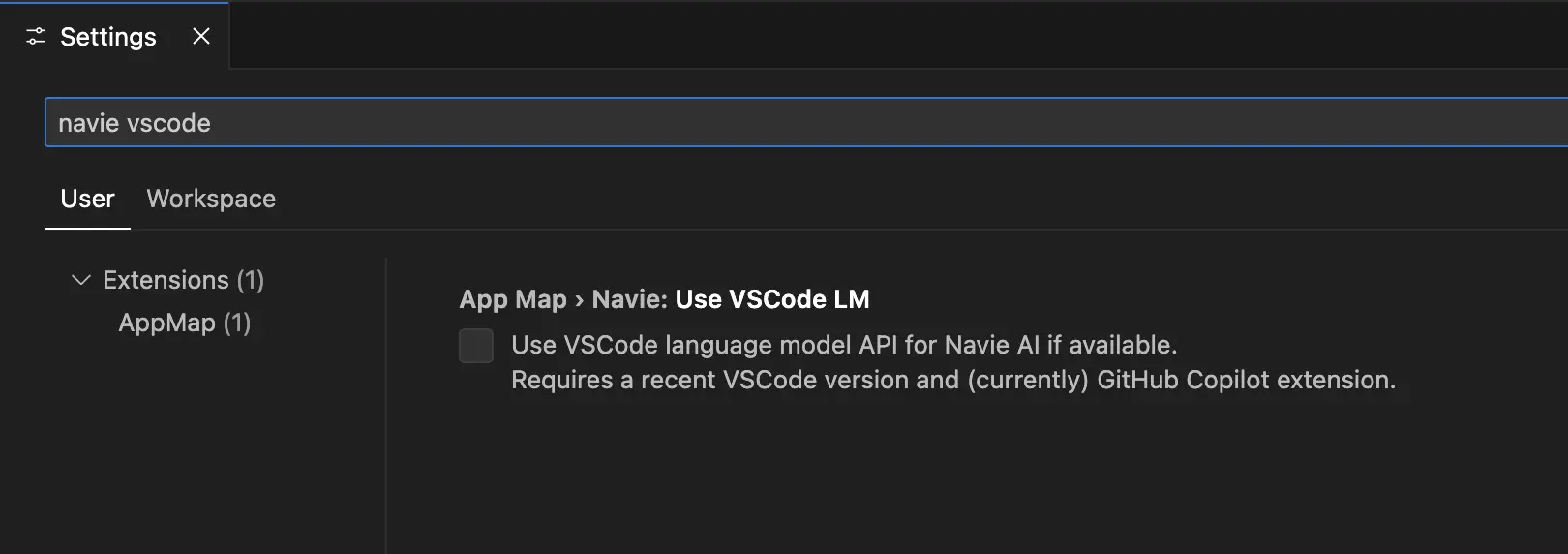

Open the VS Code Settings, and search for

navie vscode

Click the box to use the

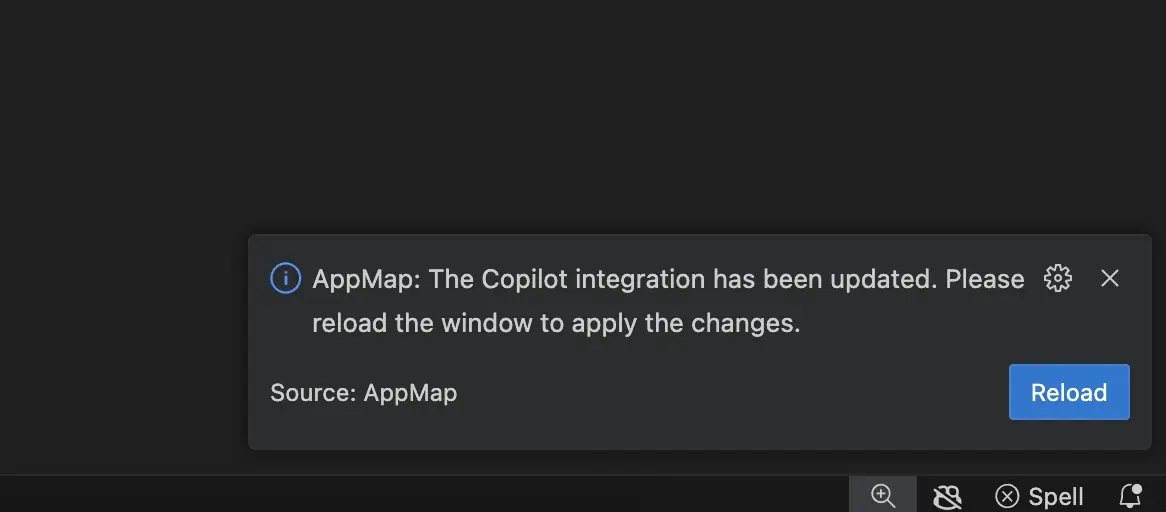

VS Code language model...After clicking the box to enable the VS Code LM, you’ll be instructed to reload your VS Code to enable these changes.

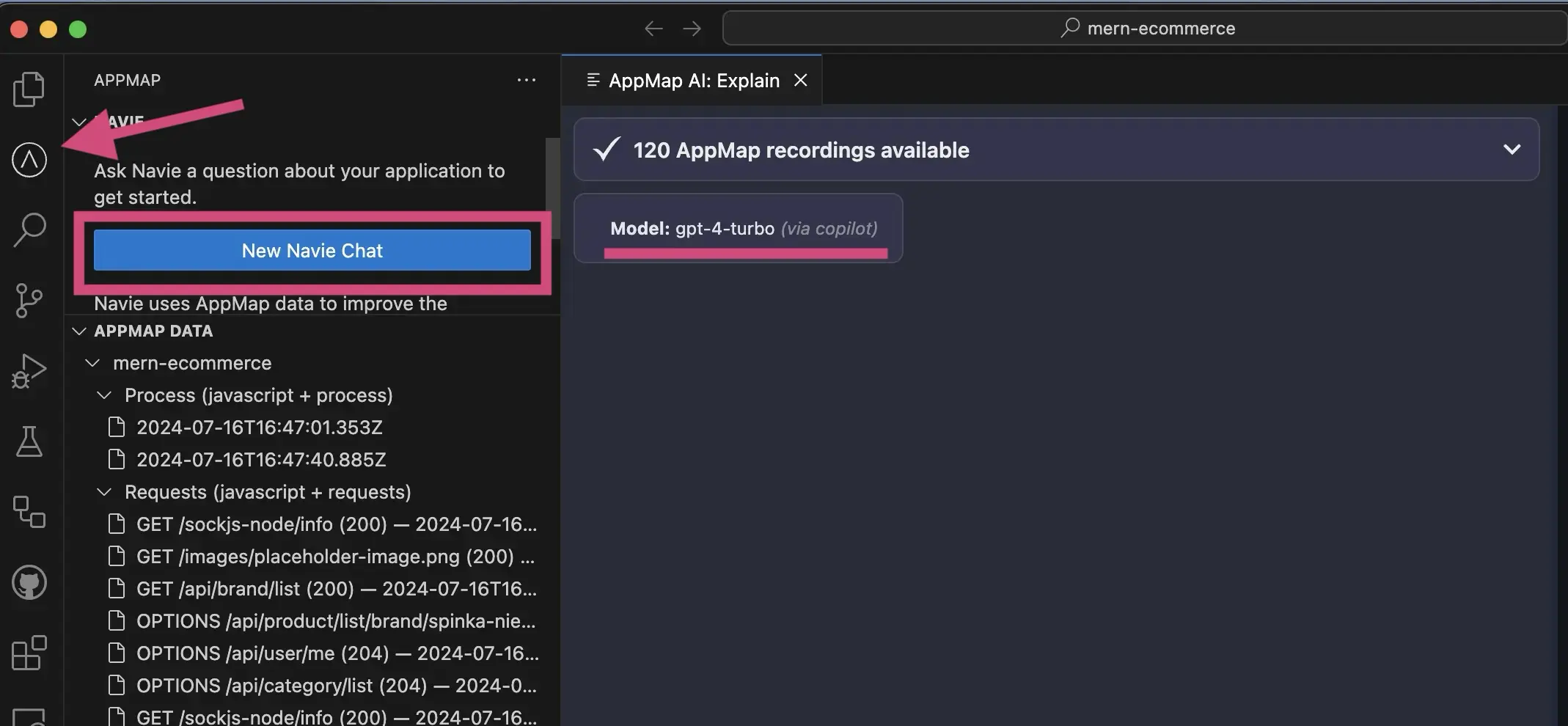

After VS Code finishes reloading, open the AppMap extension.

Select `New Navie Chat`, and confirm that the model listed is `(via copilot)`.

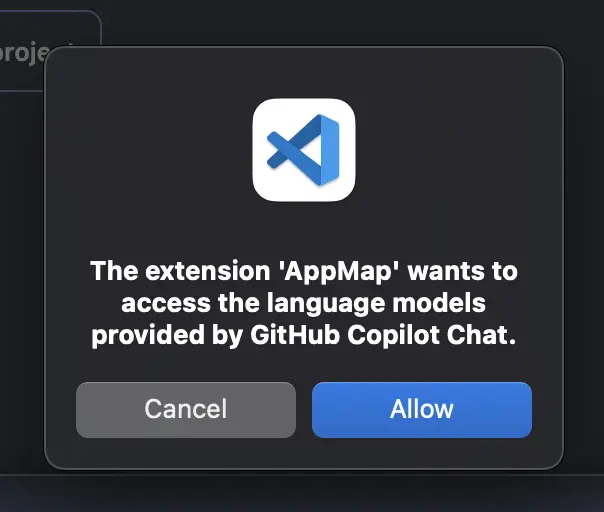

You’ll need to allow the AppMap extension to access the Copilot Language Models. After asking Navie your first question, click `Allow` in the popup to grant the necessary access.

Troubleshooting

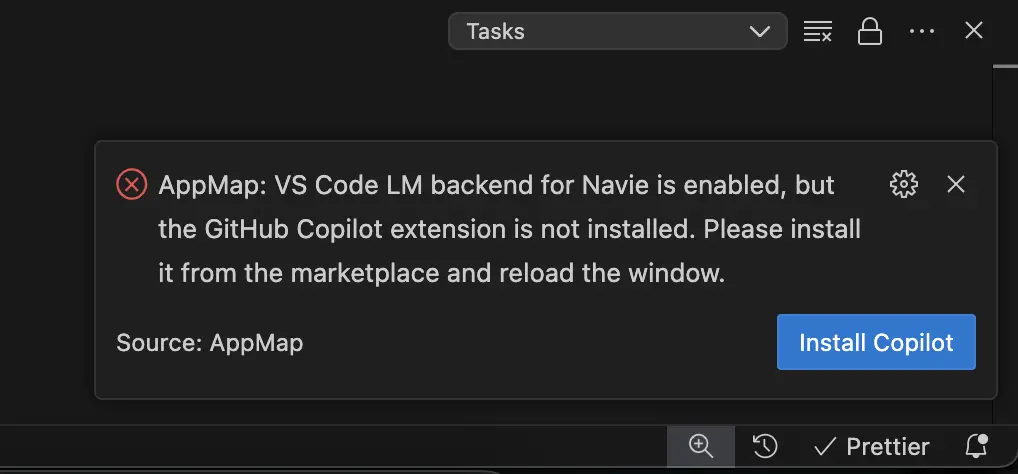

If you attempt to enable the Copilot language models without the Copilot Extension installed, you’ll see the following error in your code editor.

Click

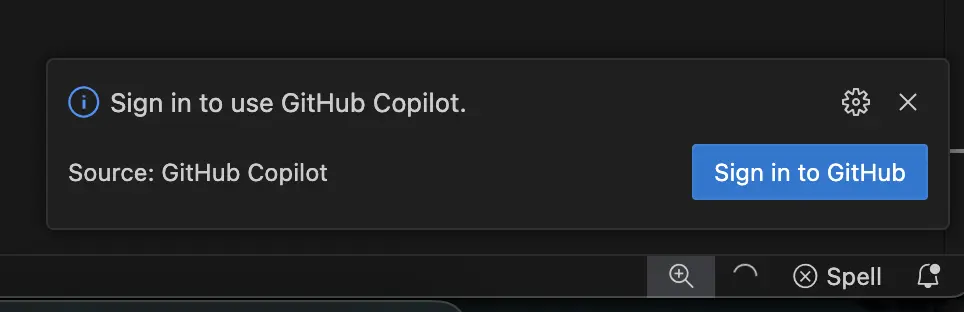

Install Copilotto complete the installation for language model support.If you have the Copilot extension installed, but have not signed in, you’ll see the following notice.

Click the

Sign in to GitHuband login with an account that has a valid paid or trial GitHub Copilot subscription.Changing the Copilot Language Model

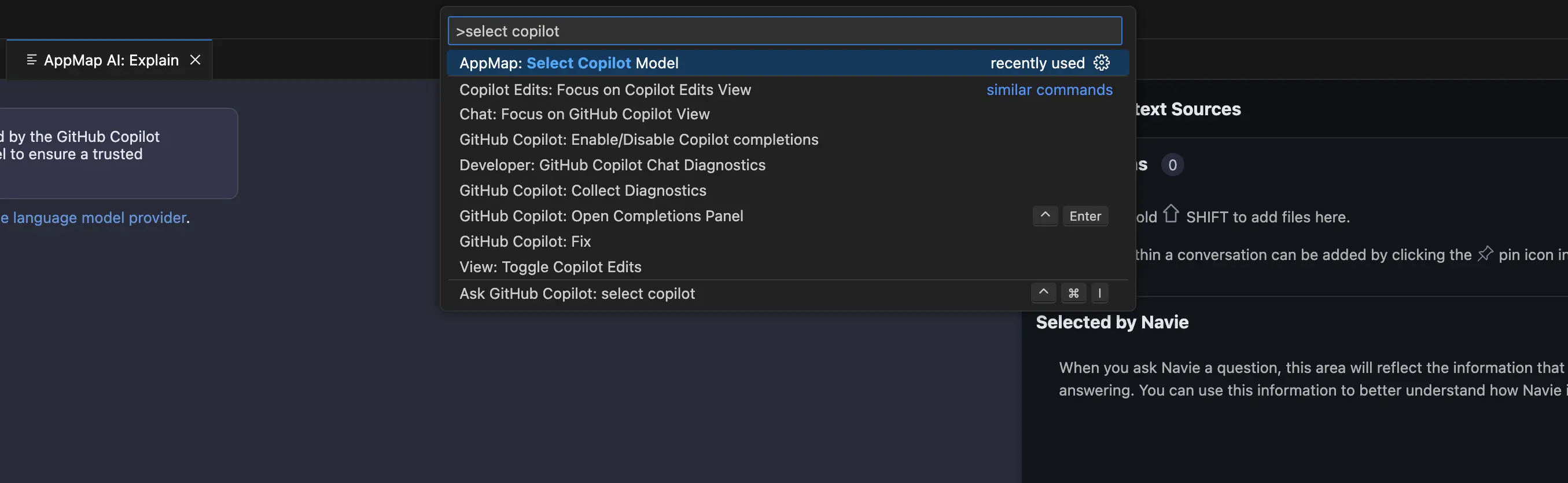

GitHub Copilot supports a variety of language models. To choose one, use the VS Code command “AppMap: Select Copilot Model” in the Command Palette.

You can use a hotkey to open the VS Code Command Palette:

- Mac: `Cmd + Shift + P`

- Windows/Linux: `Ctrl + Shift + P`

Search for `AppMap: Select Copilot Model` in the Command Palette.

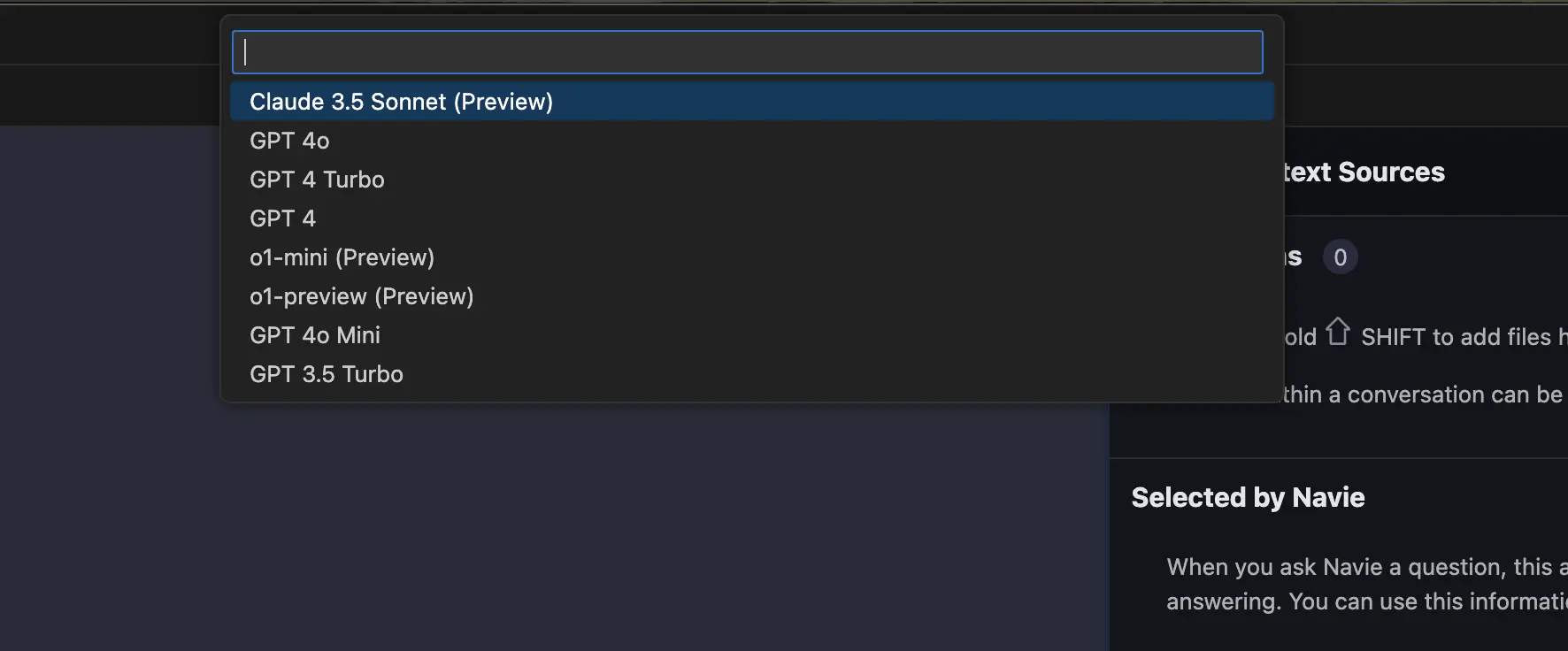

Then select the specific model you’d like to use with AppMap Navie.


Google Gemini

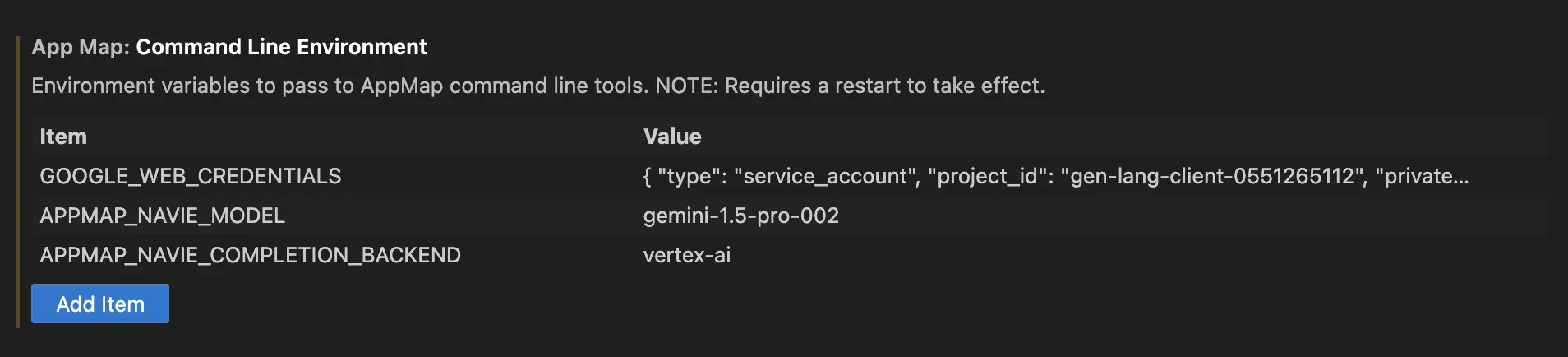

After configuring your Google Cloud authentication keys and ensuring you have access to the Google Gemini services on your Google Cloud account, configure the following environment variables in your VS Code editor. Refer to the Navie documentation for more details on where to set the Navie environment variables.

| Variable | Value |
| --- | --- |
| `GOOGLE_WEB_CREDENTIALS` | [contents of downloaded JSON] |
| `APPMAP_NAVIE_MODEL` | `gemini-1.5-pro-002` |
| `APPMAP_NAVIE_COMPLETION_BACKEND` | `vertex-ai` |
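Because `GOOGLE_WEB_CREDENTIALS` holds the contents of the downloaded JSON file rather than a path to it, it can be convenient to collapse the file to a single line before pasting it into an editor setting. The following is a minimal sketch under that assumption; the helper names are hypothetical, and this page does not state whether Navie is sensitive to whitespace in the value.

```python
from __future__ import annotations

import json


def credentials_env_value(raw_json: str) -> str:
    """Validate the downloaded key file's text and return it as compact, single-line JSON."""
    data = json.loads(raw_json)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object in the credentials file")
    # Compact separators drop the pretty-printing whitespace; newlines inside
    # string values stay escaped as \n, so the result contains no line breaks.
    return json.dumps(data, separators=(",", ":"))


def gemini_env(raw_json: str) -> dict[str, str]:
    """Assemble the three variables listed above for the Gemini backend."""
    return {
        "GOOGLE_WEB_CREDENTIALS": credentials_env_value(raw_json),
        "APPMAP_NAVIE_MODEL": "gemini-1.5-pro-002",
        "APPMAP_NAVIE_COMPLETION_BACKEND": "vertex-ai",
    }
```

To use it, read the downloaded file as text (for example with `pathlib.Path(path).read_text()`), pass the text to `gemini_env`, and copy the resulting values into your editor’s Navie environment settings.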

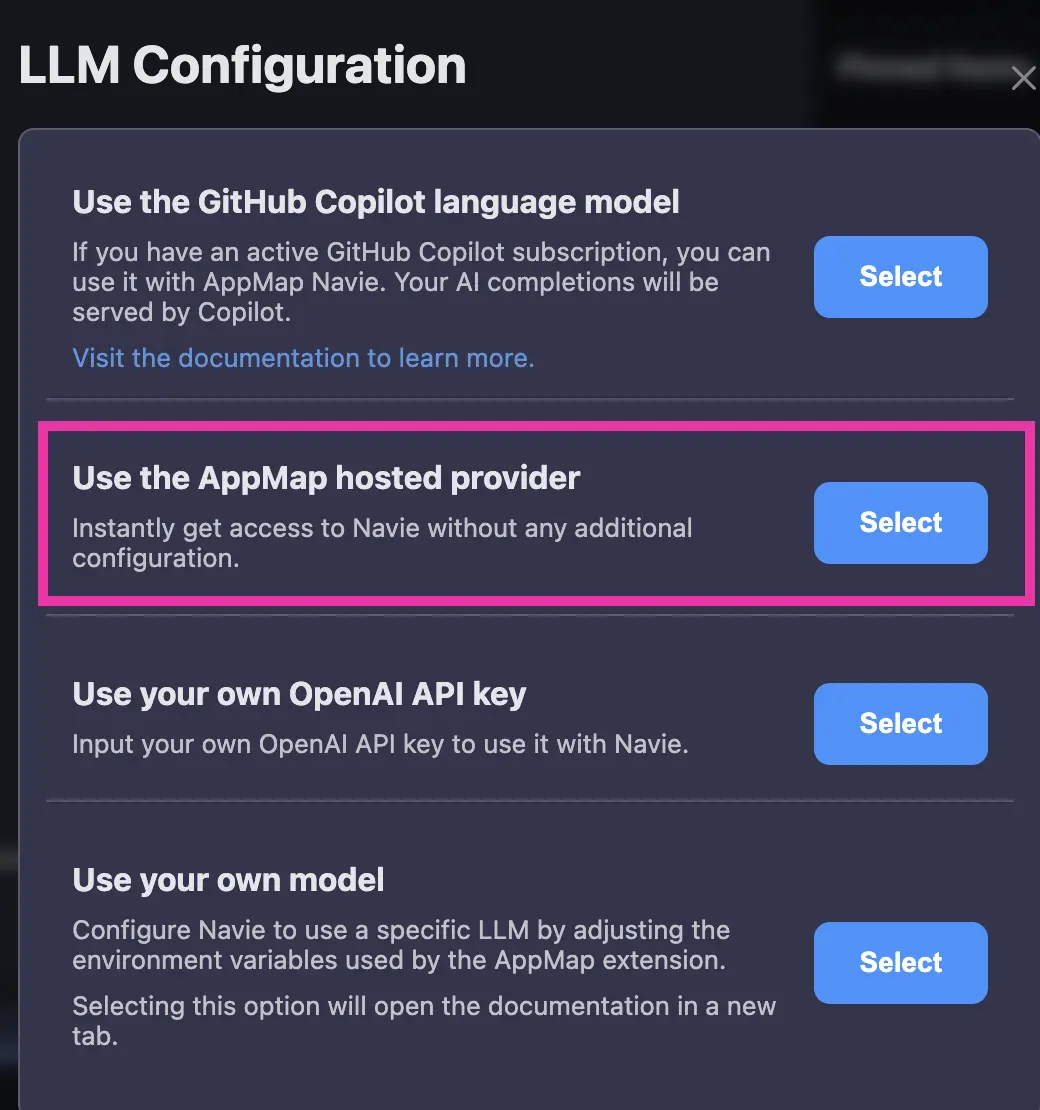

NOTE: If your code editor previously used the default GitHub Copilot backend, click the “gear” icon in the Navie chat window and select “Use AppMap Hosted Provider” to reset the language model setting. This disables the GitHub Copilot Language Model backend, and Navie then uses your environment variable configuration by default.

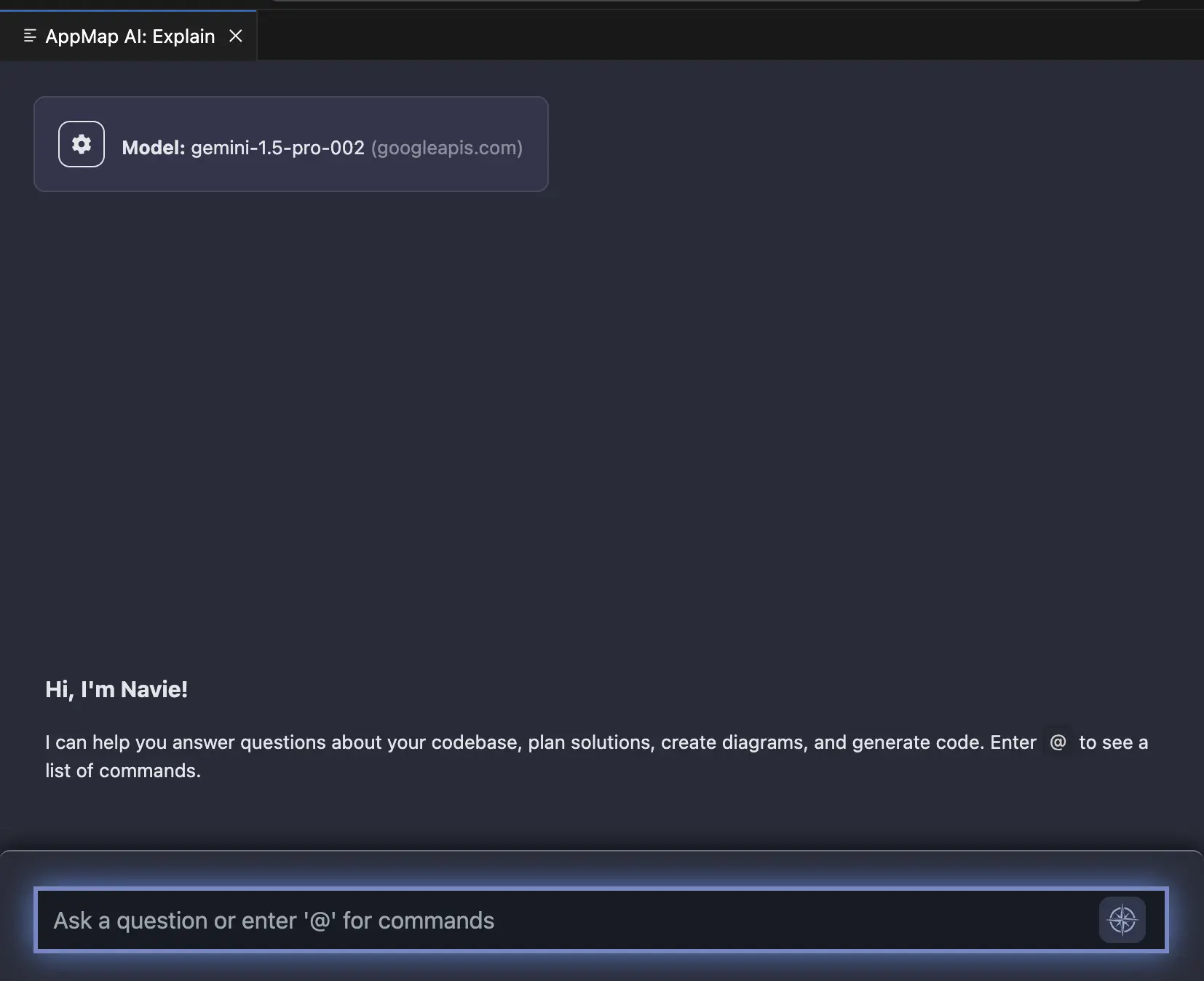

After making this change, you can confirm your model and API endpoint in the Navie chat window, which displays the currently configured language model backend.

OpenAI

Note: We recommend configuring your OpenAI key using the code editor extension. Follow the Bring Your Own Key docs for instructions. The configuration options below are for advanced users.

Only `OPENAI_API_KEY` needs to be set; other settings can stay at their defaults:

| Variable | Value |
| --- | --- |
| `OPENAI_API_KEY` | `sk-9spQsnE3X7myFHnjgNKKgIcGAdaIG78I3HZB4DFDWQGM` |

When using your own OpenAI API key, you can also change the OpenAI model that Navie uses, for example to `gpt-3.5` or to a preview model like `gpt-4-vision-preview`:

| Variable | Value |
| --- | --- |
| `APPMAP_NAVIE_MODEL` | `gpt-4-vision-preview` |

Anthropic (Claude)

AppMap supports the Anthropic suite of large language models such as Claude Sonnet or Claude Opus.

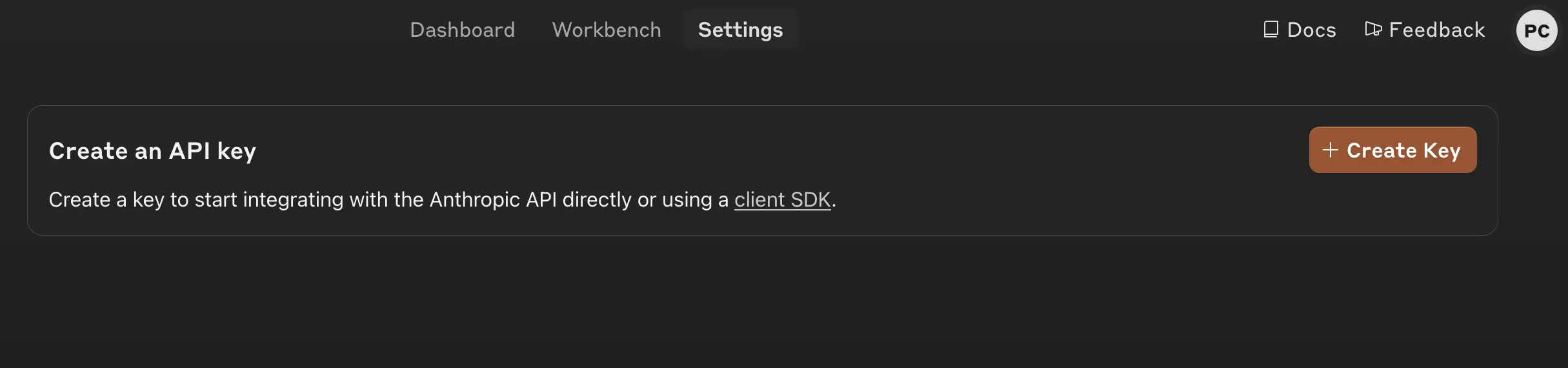

To use AppMap Navie with Anthropic LLMs you need to generate an API key for your account.

Log in to your Anthropic dashboard and choose the option to “Get API Keys”.

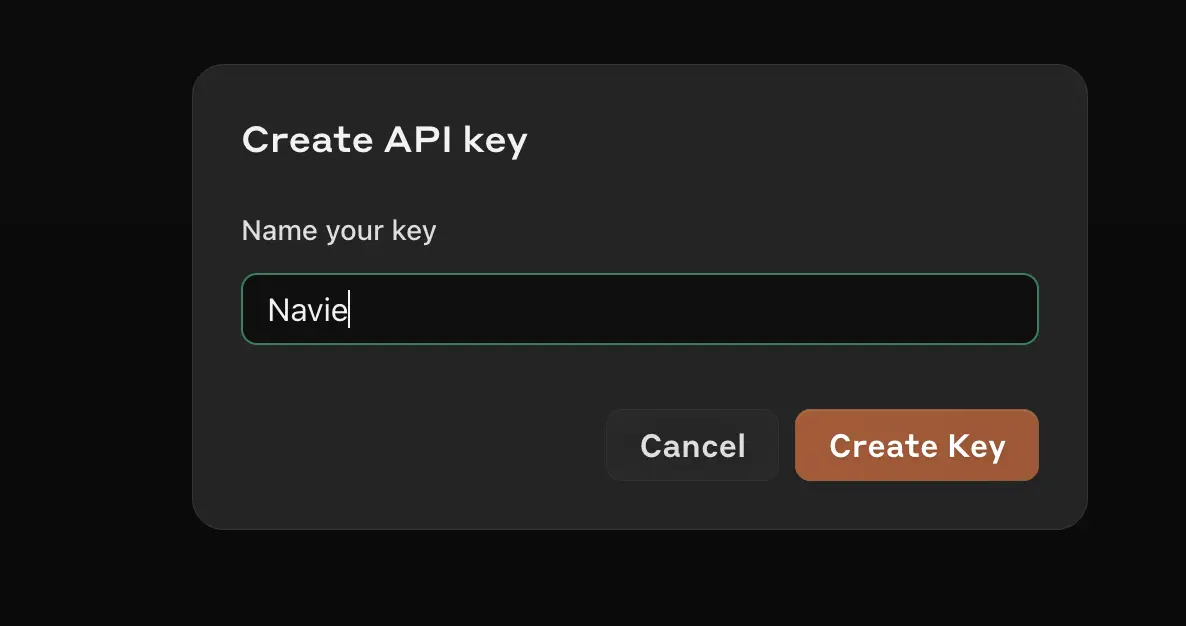

Click the box to “Create Key”.

In the next box, give your key an easy-to-recognize name.

In your VS Code or JetBrains editor, configure the following environment variables. For more details on configuring these environment variables in your VS Code or JetBrains editor, refer to the AppMap BYOK documentation.

| Variable | Value |
| --- | --- |
| `ANTHROPIC_API_KEY` | `sk-ant-api03-8SgtgQrGB0vTSsB_DeeIZHvDrfmrg` |
| `APPMAP_NAVIE_MODEL` | `claude-3-5-sonnet-20240620` |

When setting `APPMAP_NAVIE_MODEL`, refer to the Anthropic documentation for the latest available models to choose from.

Azure OpenAI

Assuming you created a `navie` GPT-4 deployment on the `contoso.openai.azure.com` OpenAI instance:

| Variable | Value |
| --- | --- |
| `AZURE_OPENAI_API_KEY` | `e50edc22e83f01802893d654c4268c4f` |
| `AZURE_OPENAI_API_VERSION` | `2024-02-01` |
| `AZURE_OPENAI_API_INSTANCE_NAME` | `contoso` |
| `AZURE_OPENAI_API_DEPLOYMENT_NAME` | `navie` |

AnyScale Endpoints

AnyScale Endpoints allows querying a selection of open-source LLMs. After you create an account you can use it by setting:

| Variable | Value |
| --- | --- |
| `OPENAI_API_KEY` | `esecret_myxfwgl1iinbz9q5hkexemk8f4xhcou8` |
| `OPENAI_BASE_URL` | `https://api.endpoints.anyscale.com/v1` |
| `APPMAP_NAVIE_MODEL` | `mistralai/Mixtral-8x7B-Instruct-v0.1` |

Consult the AnyScale documentation for model names. We recommend using Mixtral models with Navie.
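To see how these three variables fit together, the sketch below builds a standard OpenAI-compatible chat completions request from them without sending it. It is an illustration only: `build_chat_request` is a hypothetical helper, and this page does not describe how Navie issues its requests internally.

```python
import json
import urllib.request


def build_chat_request(env: dict, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat completions request from the Navie variables."""
    base_url = env["OPENAI_BASE_URL"].rstrip("/")
    body = {
        "model": env["APPMAP_NAVIE_MODEL"],
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            # OpenAI-compatible servers expect the key as a bearer token.
            "Authorization": f"Bearer {env['OPENAI_API_KEY']}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Example values copied from the table above.
ANYSCALE_ENV = {
    "OPENAI_API_KEY": "esecret_myxfwgl1iinbz9q5hkexemk8f4xhcou8",
    "OPENAI_BASE_URL": "https://api.endpoints.anyscale.com/v1",
    "APPMAP_NAVIE_MODEL": "mistralai/Mixtral-8x7B-Instruct-v0.1",
}
```

Passing the returned object to `urllib.request.urlopen` would send the request, which is a quick way to confirm that the key, base URL, and model name are accepted before configuring them in your editor.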


Fireworks AI

You can use Fireworks AI and their serverless or on-demand models as a compatible backend for AppMap Navie AI.

After creating an account on Fireworks AI you can configure your Navie environment settings:

| Variable | Value |
| --- | --- |
| `OPENAI_API_KEY` | `WBYq2mKlK8I16ha21k233k2EwzGAJy3e0CLmtNZadJ6byfpu7c` |
| `OPENAI_BASE_URL` | `https://api.fireworks.ai/inference/v1` |
| `APPMAP_NAVIE_MODEL` | `accounts/fireworks/models/mixtral-8x22b-instruct` |

Consult the Fireworks AI documentation for a full list of the available models they currently support.


Ollama

You can use Ollama to run Navie with local models. After you’ve successfully run a model with the `ollama run` command, you can configure Navie to use it:

| Variable | Value |
| --- | --- |
| `OPENAI_API_KEY` | `dummy` |
| `OPENAI_BASE_URL` | `http://127.0.0.1:11434/v1` |
| `APPMAP_NAVIE_MODEL` | `mixtral` |

Note: Even though the model is running locally, a dummy placeholder API key is still required.
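Before pointing Navie at Ollama, it can help to confirm that the model named in `APPMAP_NAVIE_MODEL` is actually installed locally. The sketch below uses Ollama’s native `/api/tags` endpoint, which lists local models. The helper names are hypothetical, and the default host is assumed to match the base URL shown above.

```python
from __future__ import annotations

import json
import urllib.request


def installed_models(tags_payload: dict) -> set[str]:
    """Extract model names (such as 'mixtral:latest') from an /api/tags response."""
    return {entry["name"] for entry in tags_payload.get("models", [])}


def is_model_available(tags_payload: dict, model: str) -> bool:
    """Check a configured model name, treating a bare name as its ':latest' tag."""
    wanted = model if ":" in model else f"{model}:latest"
    return wanted in installed_models(tags_payload)


def fetch_tags(host: str = "http://127.0.0.1:11434") -> dict:
    """Query a running Ollama server for its locally installed models."""
    with urllib.request.urlopen(f"{host}/api/tags", timeout=5) as response:
        return json.load(response)
```

With Ollama running, `is_model_available(fetch_tags(), "mixtral")` reports whether the model from the table above is present.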

LM Studio

You can use LM Studio to run Navie with local models.

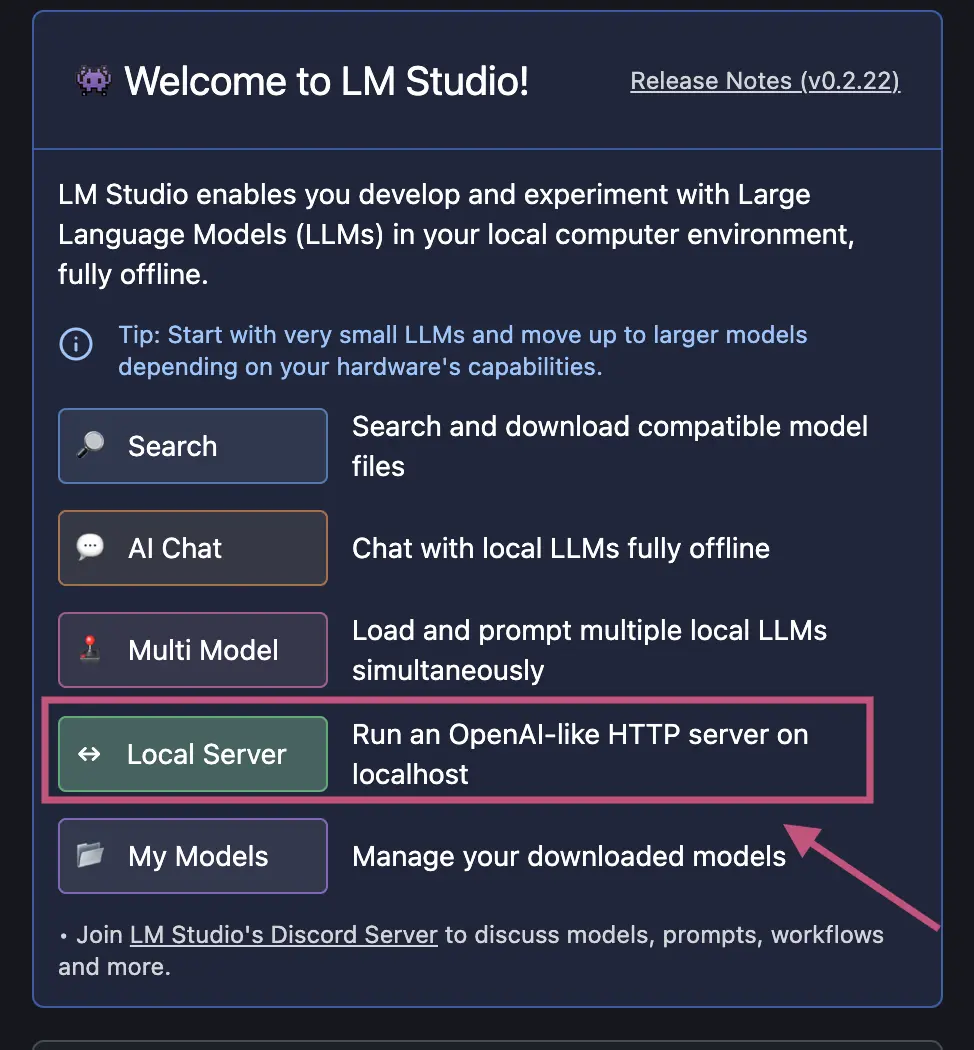

After downloading a model to run, select the option to run a local server.

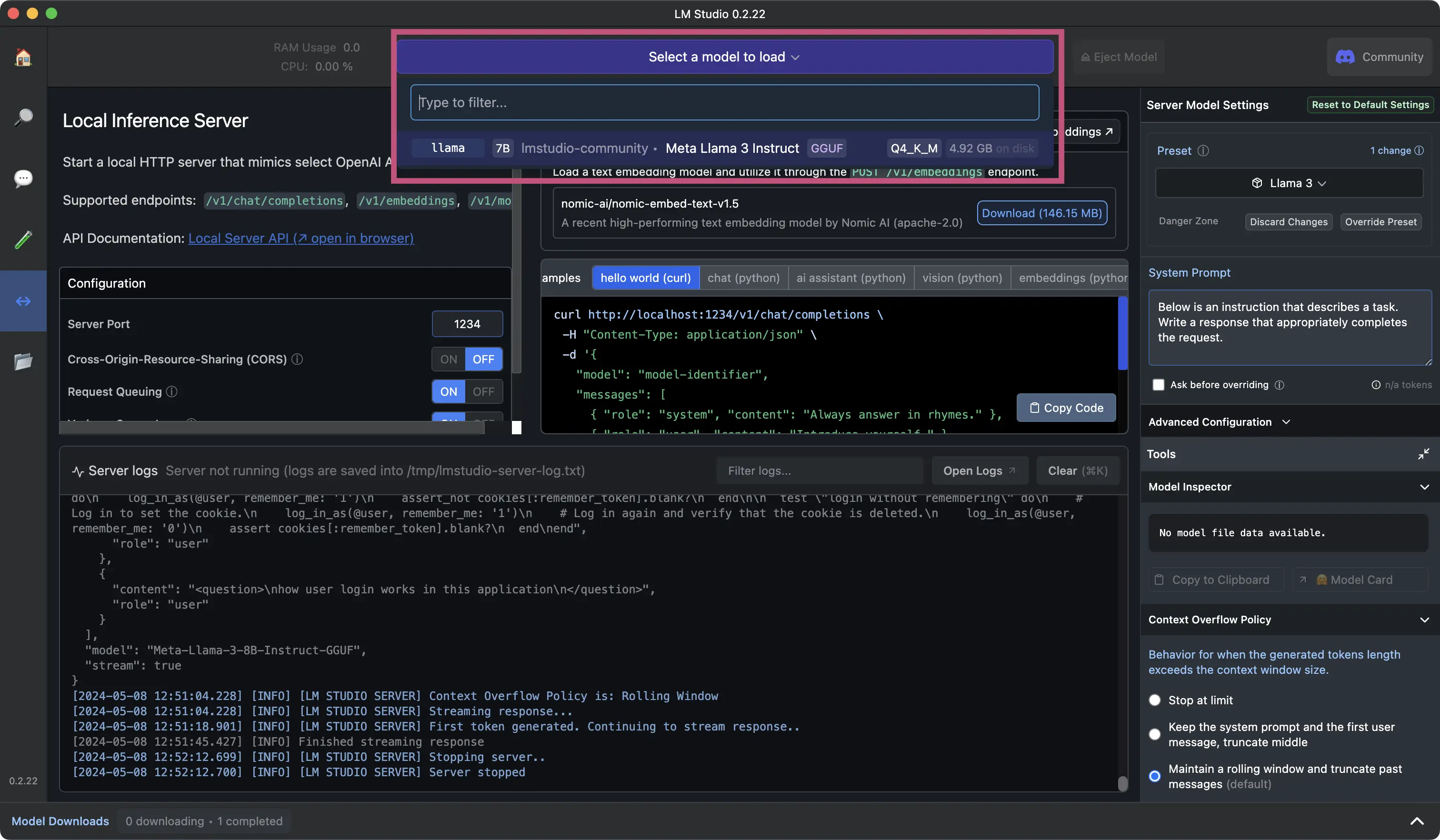

In the next window, select which model you want to load into the local inference server.

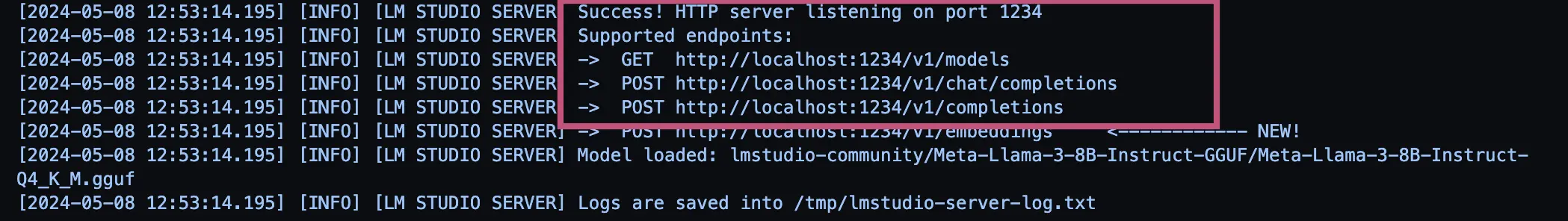

After loading your model, you can confirm it’s successfully running in the logs.

NOTE: Save the URL it’s running under to use for the `OPENAI_BASE_URL` environment variable. For example: `http://localhost:1234/v1`

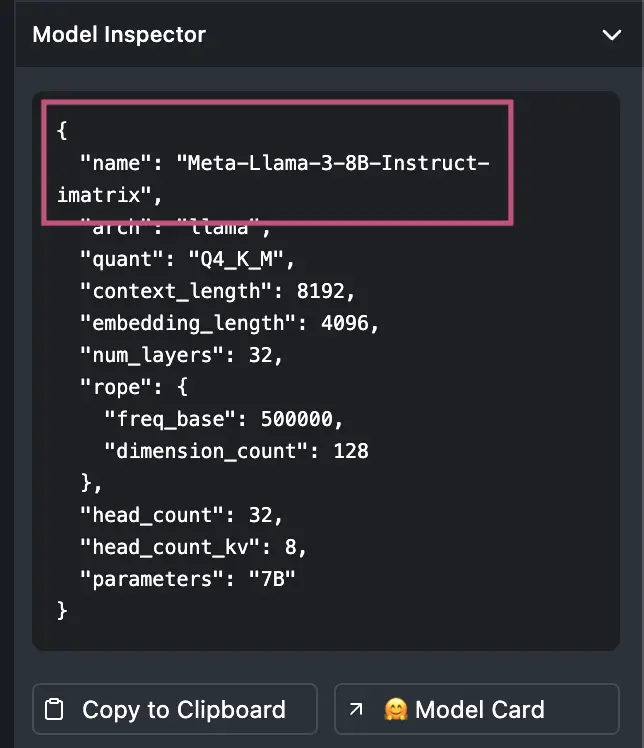

In the `Model Inspector`, copy the name of the model and use it for the `APPMAP_NAVIE_MODEL` environment variable. For example: `Meta-Llama-3-8B-Instruct-imatrix`

Continue to configure your local environment with the following environment variables based on your LM Studio configuration. Refer to the documentation above for steps specific to your code editor.

| Variable | Value |
| --- | --- |
| `OPENAI_API_KEY` | `dummy` |
| `OPENAI_BASE_URL` | `http://localhost:1234/v1` |
| `APPMAP_NAVIE_MODEL` | `Meta-Llama-3-8B-Instruct-imatrix` |

Note: Even though the model is running locally, a dummy placeholder API key is still required.
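As an alternative to copying the model name by hand, the LM Studio local server exposes an OpenAI-style `GET /v1/models` endpoint that lists the loaded model identifiers. The sketch below turns such a response into the three variables above. The function names are hypothetical, and the sample payload is illustrative rather than captured from a real server.

```python
from __future__ import annotations


def model_ids(models_payload: dict) -> list[str]:
    """Extract model identifiers from an OpenAI-style /v1/models response."""
    return [entry["id"] for entry in models_payload.get("data", [])]


def lm_studio_env(base_url: str, models_payload: dict, preferred: str | None = None) -> dict[str, str]:
    """Build the Navie variables, defaulting to the first model the server reports."""
    ids = model_ids(models_payload)
    if not ids:
        raise ValueError("the local server reports no loaded models")
    model = preferred if preferred is not None else ids[0]
    if model not in ids:
        raise ValueError(f"{model!r} is not loaded; available: {ids}")
    return {
        "OPENAI_API_KEY": "dummy",  # placeholder; still required for local servers
        "OPENAI_BASE_URL": base_url,
        "APPMAP_NAVIE_MODEL": model,
    }


# Illustrative response shape only.
SAMPLE_MODELS = {"object": "list", "data": [{"id": "Meta-Llama-3-8B-Instruct-imatrix"}]}
```

Calling `lm_studio_env("http://localhost:1234/v1", SAMPLE_MODELS)` yields the same values as the table above.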
