User Interface

AppMap Navie AI is an AI assistant that lets you ask architecture-level questions about your codebase. Navie can also help you generate new code that spans your entire project. The primary user interface for Navie lives inside your VS Code or JetBrains code editor. Refer to the Navie docs to learn how to open Navie in your code editor.

Basic Layout

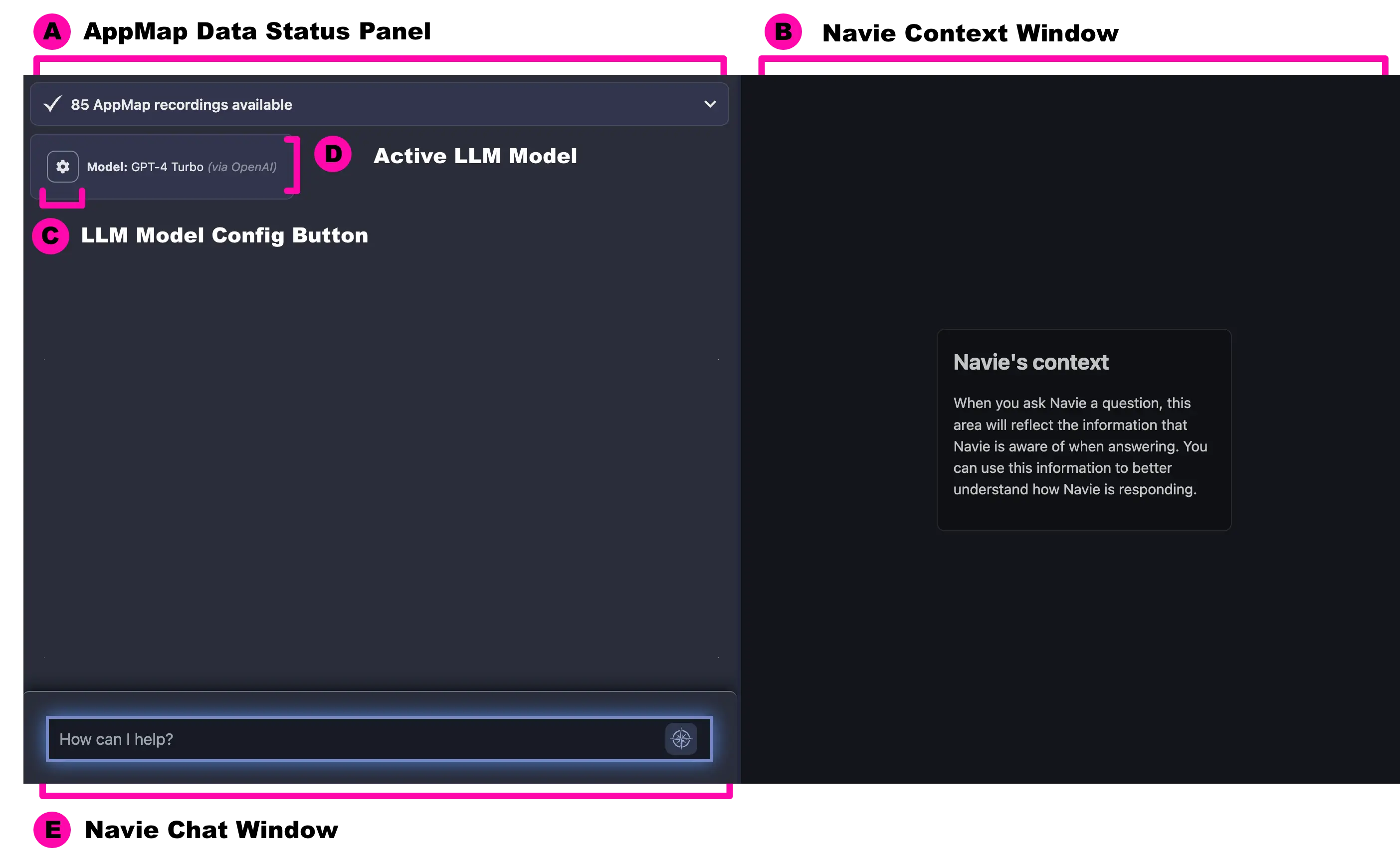

The Navie user interface consists of five key areas.

- AppMap Data Status Panel: This panel lists the current status of the AppMap Data that has been created for your project. AppMap Data are your application's runtime traces, which include HTTP and SQL requests, data flows, class- and function-level instrumentation, and more. If no AppMap Data has been created for your project, Navie can still search the static source code in your workspace for relevant code snippets.

- Navie Context Window: When you ask Navie a question, it searches your AppMap Data (if any exists) and the source code in your open workspace to locate the relevant context for your question. This area shows the information that Navie is aware of when answering. You can use it to better understand why Navie responds the way it does.

- LLM Model Config Button: You can configure Navie to use your own OpenAI API key, or bring your own LLM running locally or hosted by another provider. Clicking the gear icon opens the configuration modal for the bring-your-own-model settings. Refer to the AppMap docs for more details about how to configure Navie to use your own LLM.

- Active LLM Model: This panel displays the LLM currently configured for use with Navie. The LLM details are displayed in the following format:

  `Model: <Model Name> (<location>)`

  When using the default Navie backend, the location is listed as `default`. When using your own OpenAI API key, the location is listed as `via OpenAI`. When using Navie Bring Your Own Model, the location is the value of the `OPENAI_BASE_URL` environment variable, for example `via localhost` when using Ollama or LM Studio.

- Navie Chat Window: This is your primary location for interacting with Navie. Here you can ask Navie questions about how your application works, ask it to generate code or test cases, and even have Navie create a pull request based on your changes. To learn more about specific Navie commands, refer to the Navie Commands section.
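To make the Active LLM Model label format more concrete, here is a minimal Python sketch of how a label of the form `Model: <Model Name> (<location>)` could be derived from the three configurations described in this section. It is an illustration only and not Navie's actual implementation. The function name `model_label`, its parameters, the rule that a base URL takes precedence over an API key, and the choice to display only the host portion of `OPENAI_BASE_URL` are all assumptions made for this example.

```python
from typing import Optional
from urllib.parse import urlparse


def model_label(
    model_name: str,
    api_key: Optional[str] = None,
    base_url: Optional[str] = None,
) -> str:
    """Build a label of the form 'Model: <Model Name> (<location>)'.

    Hypothetical helper: it mirrors the rules described in this section,
    not Navie's real code.
    """
    if base_url:
        # Bring Your Own Model: location comes from OPENAI_BASE_URL.
        # Assumption: only the host part is shown (e.g. "localhost").
        host = urlparse(base_url).hostname or base_url
        location = f"via {host}"
    elif api_key:
        # Your own OpenAI API key, no custom endpoint.
        location = "via OpenAI"
    else:
        # Default Navie backend.
        location = "default"
    return f"Model: {model_name} ({location})"
```

For example, calling `model_label("example-model", base_url="http://localhost:11434/v1")` with a placeholder model name and a local endpoint URL would produce a label ending in `(via localhost)`, while calling it with only a model name would end in `(default)`.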
